On Monday 8th April, the Section conducted a virtual meeting via Zoom where Game Audio educator Nick Harrison presented on the topic of:

Integrating Audio into the Gaming Experience

Software Packages and Workflows.

After a brief introduction from Section Chairman Graeme Huon, Nick started his presentation with an Acknowledgement of Country to the Wurundjeri Woi Wurrung people of the Kulin nation, acknowledging Elders past, present and emerging. He also took a moment to mention the passing of Uncle Jack Charles and Uncle Archie Roach in 2022, two significant First Nations storytellers, musicians and truth-tellers.

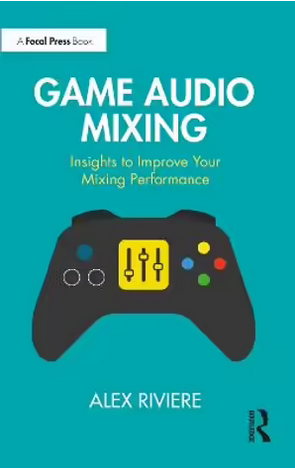

Nick then introduced game engines such as Unity and Unreal, and the two options for implementing game audio: native implementation using the engine’s built-in audio tools, or middleware such as FMOD and Wwise.

Nick briefly covered the GameSoundCon 2023 Music and Sound Design Survey, which showed that Wwise use eclipsed all other options at AAA studios. For indie titles FMOD was used the most, although Wwise still had a significant presence. Engines’ built-in tools were used by only a small minority, particularly at AAA studios.

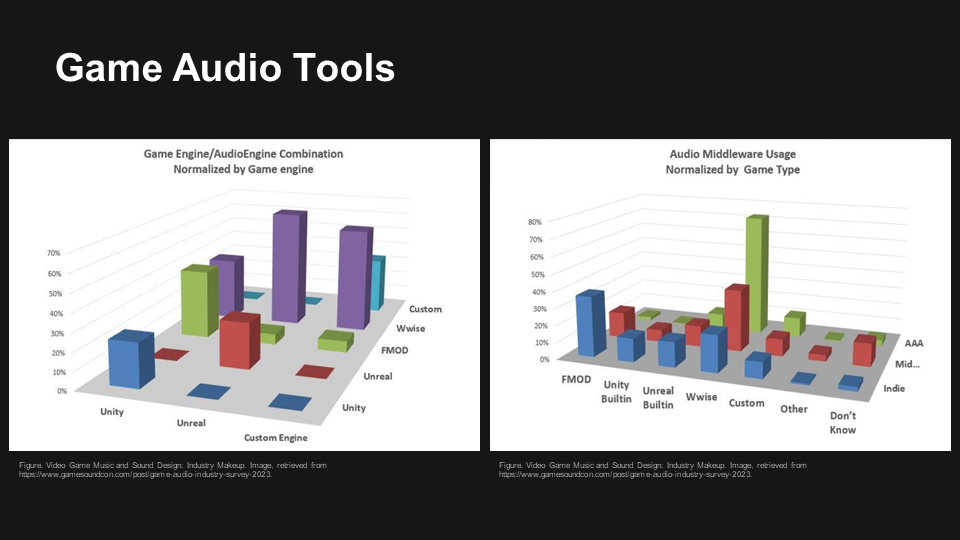

He then showed the education levels of professionals in the industry, pointing out that the survey indicated a majority held degrees in a musical or related discipline.

Nick then demonstrated adding sounds to a game in development using the Unity engine. He covered the concept of min/max distance and played a video demonstrating min/max distance and on-axis/off-axis sound.
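For readers unfamiliar with min/max distance, the idea can be sketched in a few lines of Python. This is an illustrative inverse-distance model only, not Unity's actual rolloff curve and not code from the presentation; the function name `distance_gain` and its default values are assumptions made for this example. Engines also differ in what happens beyond the maximum distance (some hold the level, others fade to silence); holding the level is assumed here.

```python
def distance_gain(distance, min_distance=1.0, max_distance=20.0):
    """Return a gain in (0, 1] for a source heard from `distance` units away.

    Hypothetical helper for illustration; not an engine API.
    """
    if min_distance <= 0 or max_distance < min_distance:
        raise ValueError("require 0 < min_distance <= max_distance")
    # Inside the minimum distance the source plays at full level.
    if distance <= min_distance:
        return 1.0
    # Beyond the maximum distance the level stops falling any further
    # (an assumption; some engines fade to silence instead).
    clamped = min(distance, max_distance)
    # Inverse-distance law: each doubling of distance halves the gain,
    # which is roughly a 6 dB drop.
    return min_distance / clamped
```

With the defaults above, a listener 2 units away hears the source at half gain, and anything past 20 units is held at the level reached at 20 units.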

This was followed by a short video demonstrating the use of an inertial measurement unit (IMU) to display movement along the x, y and z axes, and Nick described how that can be used to track head movements. He then played another video demonstrating a VR simulation he had created of an analog mixing console (SSL K Series), as a potential tool for audio students.

Using a different style of project, Nick further described creating audio within the Unity engine, and demonstrated adding sounds and locating them in the environment.

Nick then showed us how he now uses AI large language models (LLMs) such as ChatGPT or Claude to check and improve his code.

Within the demonstration game structure, Nick then showed the code needed for a simple feature – to program a set of placed proximity-sensitive frogs that ‘croaked on approach’ with random timing and pitch. The lines of code would have taken over half an hour to create ‘by hand’. Nick used the LLM ‘Claude’ to create the code in seconds from a simple text prompt he entered as we watched.
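The logic behind such a feature can be sketched independently of any engine. The Python below is not Nick's code (which was written for Unity) and not the LLM's output; the class name `ProximityFrog`, its parameters and the default ranges are all assumptions chosen for illustration. It shows one plausible way to combine a proximity check with randomised timing and pitch.

```python
import math
import random


class ProximityFrog:
    """A placed sound source that croaks while the listener is nearby.

    Hypothetical sketch; names and defaults are illustrative assumptions.
    """

    def __init__(self, position, trigger_radius=5.0,
                 interval_range=(1.0, 4.0), pitch_range=(0.8, 1.2), rng=None):
        self.position = position
        self.trigger_radius = trigger_radius
        self.interval_range = interval_range  # seconds between croaks
        self.pitch_range = pitch_range        # playback-rate multiplier
        self.rng = rng or random.Random()
        self.time_until_croak = self.rng.uniform(*interval_range)

    def update(self, listener_position, dt):
        """Advance by dt seconds.

        Returns a pitch multiplier if the frog croaks on this step, else None.
        """
        if math.dist(self.position, listener_position) > self.trigger_radius:
            return None  # listener out of range: stay silent, timer paused
        self.time_until_croak -= dt
        if self.time_until_croak > 0:
            return None
        # Schedule the next croak at a random delay and pick a random pitch.
        self.time_until_croak = self.rng.uniform(*self.interval_range)
        return self.rng.uniform(*self.pitch_range)
```

In a game loop, `update` would be called once per frame with the listener's position and the frame time, and any returned pitch value passed to whatever plays the croak sample.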

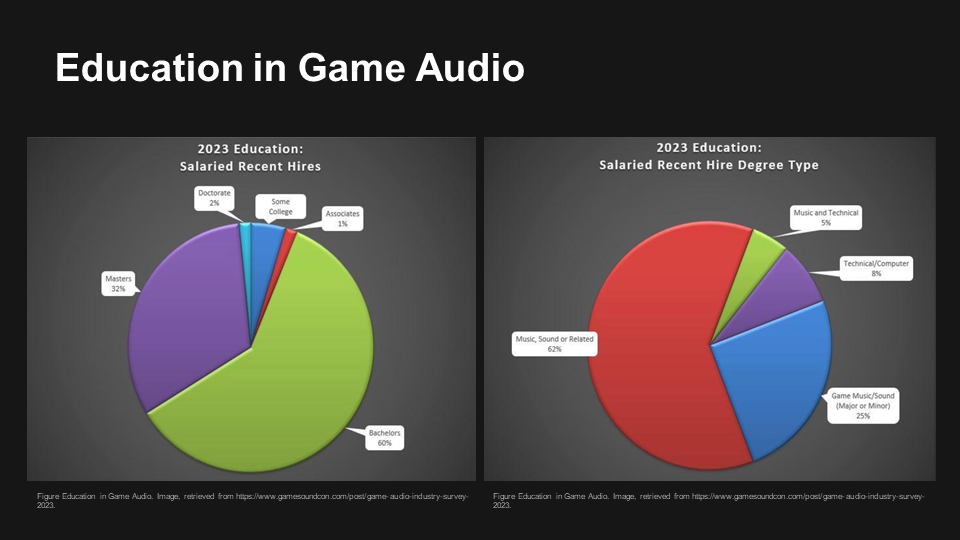

Nick then demonstrated the Wwise middleware on a project: adding sounds to specific events, adjusting parameters such as pitch, and explaining the implementation workflow.

He showed the effect of events as defined in Unity triggering the sounds defined in Wwise, and the role of trigger boxes and reverb zones.
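The relationship between a trigger box and a named event can be illustrated with a small engine-neutral sketch. This is not the Unity or Wwise API: `TriggerBox`, the `post_event` callback and the event names are hypothetical stand-ins for the game engine detecting that the listener has entered a region and the audio middleware deciding what a named event should sound like.

```python
class TriggerBox:
    """Axis-aligned box that posts named events when the listener enters or leaves.

    Hypothetical sketch; `post_event` stands in for the audio layer.
    """

    def __init__(self, lower, upper, enter_event, exit_event, post_event):
        self.lower = lower            # (x, y, z) minimum corner
        self.upper = upper            # (x, y, z) maximum corner
        self.enter_event = enter_event
        self.exit_event = exit_event
        self.post_event = post_event  # callable taking an event name
        self.inside = False

    def contains(self, point):
        return all(lo <= p <= hi
                   for lo, p, hi in zip(self.lower, point, self.upper))

    def update(self, listener_position):
        """Post an event only on the frame the listener crosses the boundary."""
        now_inside = self.contains(listener_position)
        if now_inside and not self.inside:
            self.post_event(self.enter_event)
        elif self.inside and not now_inside:
            self.post_event(self.exit_event)
        self.inside = now_inside


# Example: collect the events posted as a listener walks through the box.
posted = []
cave = TriggerBox((0, 0, 0), (10, 5, 10), "Enter_Cave", "Exit_Cave", posted.append)
for position in [(-1, 1, 1), (2, 1, 1), (5, 1, 5), (12, 1, 5)]:
    cave.update(position)
```

The game side only knows the event names; what each event plays, and whether entering the box also switches on a reverb, would be decided in the audio tool.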

After a brief mishap with Nick’s computer, which took him offline for a few minutes, he returned to wrap up with a short Q&A session.

An edited video of the Zoom session can be viewed on YouTube at:

https://youtu.be/6mKx3JuZDcY

A PDF of Nick’s slides is available at:

https://www.aesmelbourne.org.au/wp-content/media/NickHarrisonGameAudioApr24.pdf

Related Links:

Additional Reading: Game Audio Mixing by Alex Riviere

https://www.booktopia.com.au/game-audio-mixing-alex-riviere/book/9781032397351.html

Game Audio Industry Music and Sound Design Survey 2023

https://www.gamesoundcon.com/post/game-audio-industry-survey-2023